The Annwvyn Game Engine, and how I started doing VR

Ybalrid

If you know me, you probably also know that I’m developing a small C++ game engine called Annwvyn, aimed at simplifying the creation of VR games and experiences for “consumer grade” VR systems (mainly the Oculus Rift, and more recently the Vive too).

The funny question is: with tools like Unreal Engine 4 or Unity 5 available for free (or almost free), why bother?

There are multiple reasons, but to understand them, I should give some context. This story started in 2013, at a time when you actually had to pay to use Unity with the first Oculus Rift Development Kit (aka DK1), and when UDK (the version of Unreal Engine 3 you were able to use) was such a mess that I didn’t want to touch it…

The current VR “revolution” was kicked off by John Carmack’s presentation at E3 2012, where he showed DOOM running on a hacked-together VR headset.

An LCD screen, some lenses and a sensor (IMU) held together with a bit of tape on ski goggles.

This prototype hardware was made by some guy called Palmer Luckey. Carmack found out about the kit and asked him to send a prototype to Texas (I think they got in touch via the MTBS3D forum), patched DOOM 3: BFG to use it, and showed it at E3. (He explained the story many times to visitors of the id Software booth; search YouTube if you want to see the interviews.)

That Palmer guy found some partners in crime and started “Oculus VR” to develop a VR headset called “the Rift”. They ran a very successful Kickstarter campaign, and later opened pre-orders for the first “development kit”.

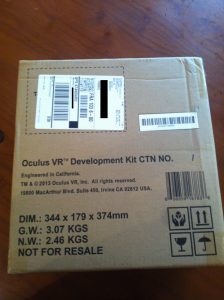

The kit cost about $300, and I jumped on it after some friends told me about the project (I hadn’t heard of it, and the Kickstarter was already over). That was at the very start of 2013.

They started shipping in March, but I only got mine in June, on my very last day of school that year.

As luck would have it, it arrived early in the morning, so I took the box straight to KPS, ESIEA’s computer science club, without having time to properly unbox it or try it out.

I set it up at school. I already had some demo software on my laptop, so it worked right away. I tried it with a bunch of friends. Their experiences were more or less comfortable, but everybody was blown away.

Good news: I’m totally immune to VR’s “simulator sickness” (I don’t get motion sickness, and I have no trouble doing things like reading books in cars). The DK1 only tracked orientation, with a head/neck model to approximate translation. The screen inside was genuinely crappy and the image blurred when you moved your head, but it did the job for the time, and it was amazing!
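To make the “head/neck model” idea concrete: with orientation-only tracking, a common trick is to assume the head rotates around a pivot at the base of the neck, so that turning or nodding also moves the eyes along an arc. This is a minimal, self-contained C++ sketch of that idea, not the Oculus SDK’s code; the `Vec3`/`Quat` types, the `eyePosition()` function and the offset values in `kNeckToEye` are all hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Minimal vector/quaternion types for this sketch only.
struct Vec3 { double x, y, z; };
struct Quat { double w, x, y, z; };  // expected to be a unit quaternion

// Rotates v by unit quaternion q using v' = v + w*t + u x t, with t = 2 (u x v)
// and u = (q.x, q.y, q.z).
Vec3 rotate(const Quat& q, const Vec3& v) {
    assert(std::fabs(q.w * q.w + q.x * q.x + q.y * q.y + q.z * q.z - 1.0) < 1e-6);
    const Vec3 t{2.0 * (q.y * v.z - q.z * v.y),
                 2.0 * (q.z * v.x - q.x * v.z),
                 2.0 * (q.x * v.y - q.y * v.x)};
    return {v.x + q.w * t.x + (q.y * t.z - q.z * t.y),
            v.y + q.w * t.y + (q.z * t.x - q.x * t.z),
            v.z + q.w * t.z + (q.x * t.y - q.y * t.x)};
}

// Hypothetical offset from the neck pivot to the point between the eyes, in
// metres (+Y up, -Z forward). Illustrative values, not taken from any SDK.
const Vec3 kNeckToEye{0.0, 0.15, -0.09};

// Orientation-only tracking: approximate translation by rotating a fixed
// offset around the neck pivot.
Vec3 eyePosition(const Vec3& neckPivot, const Quat& headOrientation) {
    const Vec3 off = rotate(headOrientation, kNeckToEye);
    return {neckPivot.x + off.x, neckPivot.y + off.y, neckPivot.z + off.z};
}
```

Turning the head 90° to the left about the vertical axis, for instance, swings the eye point from 9 cm in front of the pivot to 9 cm to its left, which is the kind of small translation a neck model adds on top of pure rotation.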

So I spent a few hours with it. Valve had just made Team Fortress 2 and Half-Life 2 playable “in VR”, and I spent several multi-hour sessions playing as Gordon Freeman in City 17 without problems (well, the game at the time broke at the part where you had to drive the airboat :-P).

After playing with this exciting new toy, I thought: “Well, I know a bit about 3D modeling.” (I used to do 3D CAD in “engineering sciences” classes in high school, and I had messed around with Blender since middle school, so I knew how to make stuff out of polygons.) And, being a programmer, I had this in mind:

“I know nothing about computer graphics programming. But I can learn. I want to write code for that thing.”

So I evaluated the “software development” landscape for the Rift prototype at the time:

- The Unity game engine needed a plugin, and at the time plugins were only supported in the Pro version. Oculus gave away a few months of free Unity Pro trial, but I did not want to rely on proprietary software that I had to pay for

- UDK was free, but the integration was messy and I couldn’t get it to work with a reasonable amount of effort

Being pretty comfortable with C++, I decided not to use any of these ready-made game engines.

I laid out a simple outline of what I would need to simulate a simple VR world with 3D objects around me:

- I need to make a 3D scene with objects inside, and render it properly for the Rift, including the head tracking

- Each object should at least have rigid body physics and be subject to gravity to feel realistic

- Spatialized sound should exist, and objects should be able to emit sounds

- I just want to be able to create a 3D scene with a few lines in the main() function
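As a rough idea of what that last point could look like in practice, here is a minimal C++ sketch of a scene facade. The names (`World`, `Object`, `addObject()`, `step()`, `makeDemoScene()`) are hypothetical and do not reflect Annwvyn’s actual API, and the toy physics only integrates gravity (semi-implicit Euler, no collisions), whereas the real engine delegates physics to Bullet.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <string>

// Hypothetical facade for illustration only; this is not Annwvyn's real API.
struct Vec3 { double x, y, z; };

struct Object {
    std::string mesh;  // mesh to render (e.g. an Ogre .mesh file name)
    Vec3 position;     // world position, metres
    Vec3 velocity;     // metres per second
    double mass;       // kilograms; 0 marks a static object
};

class World {
public:
    // std::deque keeps references to existing elements valid on push_back.
    Object& addObject(const std::string& mesh, const Vec3& position, double mass) {
        assert(mass >= 0.0);
        objects_.push_back(Object{mesh, position, Vec3{0.0, 0.0, 0.0}, mass});
        return objects_.back();
    }

    // One simulation tick: semi-implicit Euler under constant gravity.
    void step(double dt) {
        for (Object& o : objects_) {
            if (o.mass == 0.0) continue;        // static objects never move
            o.velocity.y += kGravity * dt;      // update velocity first...
            o.position.x += o.velocity.x * dt;  // ...then position
            o.position.y += o.velocity.y * dt;
            o.position.z += o.velocity.z * dt;
        }
    }

    std::size_t objectCount() const { return objects_.size(); }
    const Object& object(std::size_t i) const { return objects_.at(i); }

private:
    static constexpr double kGravity = -9.81;  // m/s^2, +Y is up
    std::deque<Object> objects_;
};

// The kind of "few lines" a user would write in main().
World makeDemoScene() {
    World world;
    world.addObject("floor.mesh", {0.0, 0.0, 0.0}, 0.0);    // static floor
    world.addObject("crate.mesh", {0.0, 2.0, -3.0}, 10.0);  // falls under gravity
    return world;
}
```

Calling `step()` once per frame with the frame time is enough to see the crate fall; a real engine would also push the new positions to the renderer and to the audio sources.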

The first step was rendering 3D to the headset. At the time, the system simply saw it as an external screen, so all you needed to do was put a window in the right place with the right content.
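For the “right content” part: the DK1 exposed a single 1280×800 panel, and the image was drawn side by side, the left half for the left eye and the right half for the right eye, each rendered from its own camera offset horizontally by half the interpupillary distance, then warped with a barrel-distortion shader to compensate for the lenses. This C++ sketch covers only the viewport split; the `Viewport` struct and `sideBySideViewports()` function are hypothetical, and window placement and the distortion pass are left out because they depend on the windowing and graphics APIs.

```cpp
#include <array>
#include <cassert>

struct Viewport { int x, y, width, height; };  // pixels, origin at top-left

// Side-by-side stereo: index 0 is the left eye (left half of the window),
// index 1 is the right eye (right half). Odd widths give the extra column
// to the right eye.
std::array<Viewport, 2> sideBySideViewports(int windowWidth, int windowHeight) {
    assert(windowWidth > 0 && windowHeight > 0);
    const int half = windowWidth / 2;
    return {{Viewport{0, 0, half, windowHeight},
             Viewport{half, 0, windowWidth - half, windowHeight}}};
}
```

On the DK1’s 1280×800 screen, this gives each eye a 640×800 viewport.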

Without any previous experience in programming with OpenGL or Direct3D, I was looking for a library that would give me a nice abstraction layer between me and the graphics card.

I came across an “HMD Irrlicht” project on GitHub and tried to make something work with it. However, I ran into problems with the camera tracking, and Irrlicht felt a bit light for what I wanted to do.

Then I tried out the other “well known open source 3D engine”, called Ogre (Object-Oriented Graphics Rendering Engine), and I was pretty pleased with what I saw.

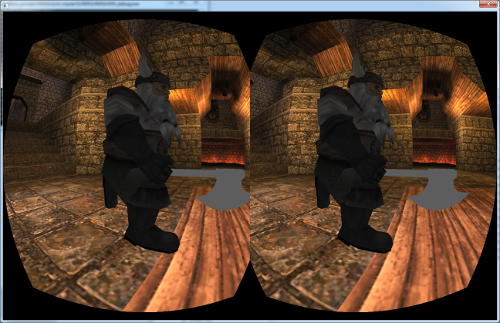

Somebody called Kojack on the Oculus developer forums (apparently also a pretty active member of the Ogre community) had made a simple integration of the Rift with Ogre. It worked really well, and the code was easy for me to understand.

So I refactored it in a way I was happy with, then started writing a few classes to instantiate and run, side by side, audio with OpenAL, physics with Bullet, and the 3D rendering. I managed to get something that kind of worked.
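OpenAL does the spatialization itself once it is given listener and source positions. For a sense of what that involves, this sketch reproduces the distance-attenuation formula of OpenAL’s `AL_INVERSE_DISTANCE_CLAMPED` model (the default distance model in OpenAL 1.1, selectable with `alDistanceModel()`), as described in the OpenAL 1.1 specification. It only illustrates the math, which an application does not need to compute itself, and `inverseDistanceClampedGain()` is a hypothetical helper name.

```cpp
#include <algorithm>
#include <cassert>
#include <cfloat>

// Distance gain under the AL_INVERSE_DISTANCE_CLAMPED model. Default arguments
// mirror the spec defaults for AL_REFERENCE_DISTANCE, AL_ROLLOFF_FACTOR and
// AL_MAX_DISTANCE. Cone, source gain and listener gain are not included.
float inverseDistanceClampedGain(float distance,
                                 float referenceDistance = 1.0f,
                                 float rolloffFactor = 1.0f,
                                 float maxDistance = FLT_MAX) {
    assert(referenceDistance > 0.0f);
    // Clamp first: closer than the reference distance never gets louder,
    // and beyond the max distance the gain stops decreasing.
    distance = std::min(std::max(distance, referenceDistance), maxDistance);
    return referenceDistance /
           (referenceDistance + rolloffFactor * (distance - referenceDistance));
}
```

With the default parameters, the gain is simply 1/distance beyond 1 unit, so doubling the distance halves it (2 units gives 0.5, 4 units gives 0.25).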

The way VR is rendered to HMDs has since changed completely, and nothing is left of this old code. Also, in 3 years I have learned a lot about 3D rendering and about the inner workings of the Ogre engine.

The main idea was that I wanted to manipulate a single object and have everything else (rendering, physics, sound) updated automatically. So I wrote a few classes that did just that.
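One way to get “manipulate one object, and have everything updated” is a single game-object class that owns the handles of the three subsystems and is the only thing user code touches. In this C++ sketch, the `RenderNode`, `PhysicsBody` and `SoundSource` structs are hypothetical stand-ins for an Ogre scene node, a Bullet rigid body and an OpenAL source, and `GameObject` is not Annwvyn’s actual class.

```cpp
#include <cassert>

struct Vec3 {
    double x, y, z;
    bool operator==(const Vec3& o) const { return x == o.x && y == o.y && z == o.z; }
};

// Hypothetical stand-ins for an Ogre scene node, a Bullet rigid body and an OpenAL source.
struct RenderNode  { Vec3 position{}; };
struct PhysicsBody { Vec3 position{}; };
struct SoundSource { Vec3 position{}; };

class GameObject {
public:
    // User code moves the object once; every subsystem's copy follows.
    void setPosition(const Vec3& p) {
        render_.position = p;
        body_.position = p;
        sound_.position = p;
        assert(inSync());
    }

    // After a physics step the body is authoritative: copy its position out.
    void syncFromPhysics() {
        render_.position = body_.position;
        sound_.position = body_.position;
    }

    // True when the render node and sound source match the physics body.
    bool inSync() const {
        return render_.position == body_.position && sound_.position == body_.position;
    }

    PhysicsBody& body() { return body_; }
    const RenderNode& render() const { return render_; }
    const SoundSource& sound() const { return sound_; }

private:
    RenderNode render_;
    PhysicsBody body_;
    SoundSource sound_;
};
```

With real Bullet objects, the physics-to-render direction is usually handled by a `btMotionState`, whose `setWorldTransform()` Bullet calls for moving bodies after each simulation step, rather than by a manual call like `syncFromPhysics()`.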

Then I thought that putting all of this together was hard enough to do once. So I removed the main() function from my program and started looking into how to build a dynamic library with Visual Studio. After that, I was thinking:

“Hey, I can totally make a game engine out of this”
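Turning the code into a dynamic library mostly means marking what applications are allowed to use as exported. The usual MSVC pattern is a macro that expands to `__declspec(dllexport)` while building the DLL and to `__declspec(dllimport)` when consuming it. This C++ sketch shows that pattern with hypothetical names (`MYENGINE_API`, `MYENGINE_EXPORTS`, `Engine`) and falls back to the GCC/Clang visibility attribute on other platforms.

```cpp
#include <cassert>
#include <string>
#include <utility>

// Hypothetical names; the macro pattern itself is the usual one for MSVC DLLs.
// Only the library's own project defines MYENGINE_EXPORTS.
#if defined(_WIN32)
#  if defined(MYENGINE_EXPORTS)
#    define MYENGINE_API __declspec(dllexport)
#  else
#    define MYENGINE_API __declspec(dllimport)
#  endif
#else
   // GCC/Clang: keep the symbol visible even when building with -fvisibility=hidden.
#  define MYENGINE_API __attribute__((visibility("default")))
#endif

// Anything the application is allowed to use is marked with the macro.
class MYENGINE_API Engine {
public:
    explicit Engine(std::string appName) : appName_(std::move(appName)) {
        assert(!appName_.empty());
    }
    const std::string& appName() const { return appName_; }

private:
    std::string appName_;
};
```

In Visual Studio, `MYENGINE_EXPORTS` would be added to the preprocessor definitions of the DLL project only; building it produces the `.dll` plus an import `.lib` that the game project links against.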

So, after that, I needed a name.

I wanted something that could represent “imaginary worlds”, rooted in history or mythology. I’m a bit into Celtic stuff, and I came across the word “Annwvyn” (the spelling varies, but this is the coolest-looking form, in my humble opinion :-P)

Annwvyn is basically the “Otherworld” of the ancient Celts of Wales. It’s also somewhat tied to Arthurian legend, so it has everything to please me 😉

I spent most of my summer making this thing work, and at the end of August I published the source code on GitHub.

Through the “technical and scientific projects” of my engineering school, I found a way to make Annwvyn the backbone of my projects, so I could work on it during school hours (though I must admit that even when I’m supposed to be doing other things, I tend to work on it… ^^”)

Below are some videos (a playlist) showing various things running (or not working, but funny) in Annwvyn.

The engine is still in an early phase of development. It now runs on the Oculus CV1, and theoretically on the Vive too (it supports OpenVR/SteamVR, but I haven’t had the chance to try it on a Vive).

By default, the player is controlled with an FPS-like control scheme. This will be expanded in the near future. For now, the engine is not feature-rich enough to power “real games”. I’m currently working on a few samples/demos to show what the engine is capable of. I’m also planning to develop some mini-games or experiences with Annwvyn and to keep them compatible with the engine as it evolves.

The engine lacks proper documentation. I’m making some effort on this with a wiki at wiki.annwvyn.org, and the code is commented so that Doxygen can generate API documentation, which is published at api.annwvyn.org.

Anyway, this is a student project, not a really usable piece of software. It’s not properly documented. It’s not properly tested. It’s still under active development, so the API is not stable. It still lacks fundamental features. But it has the advantage of being the lightest VR development framework I can run on my laptop or on the school’s computers without real issues.

And, well, it’s just my pet project… ^^”